Science fiction writers have been exploring the societal implications of human-like robots for generations. The late, great Isaac Asimov suggested in his robot stories, including “The Caves of Steel,” that the introduction of such machines would cause social unrest among workers who found themselves displaced. Dr. Asimov, meet “Stop the Robots.” According to a Sunday story in USA Today, about two dozen protestors showed up at the SXSW tech and entertainment festival in Austin, Texas, to express their rage against the machine.

As near as can be determined, the “Stop the Robots” protestors are less worried about robots taking their jobs than about robots taking over the world. They are more afraid of the Terminator than they are of R. Daneel Olivaw. The paranoia about artificial intelligence and its implications has afflicted some high-profile celebrities, including physicist Stephen Hawking and space entrepreneur Elon Musk.

Fear of technology and its implications has been around for centuries, ever since a legendary figure named Ned Ludd smashed a weaving machine in the late 18th century, giving rise to the word “Luddite” as a label for people who are mindlessly opposed to technology. Contrary to such fears, the advance of technology has been a boon to humankind, improving lives and creating more jobs than it destroys.

Even so, concern about artificially intelligent machines and their relationship to humans is not frivolous. Asimov wrestled with the problem, creating the Three Laws of Robotics that he suggested should be incorporated into every such device.

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

A future in which Terminators that look like Arnold Schwarzenegger, controlled by Skynet, chase the last remnants of humanity across a blasted landscape is certainly plausible. “Battlestar Galactica” had a similar story of artificial beings called Cylons attempting to exterminate humans. A lesser-known scenario, popularized by a movie called “Colossus: The Forbin Project,” has an AI computer taking over the world for our own good.

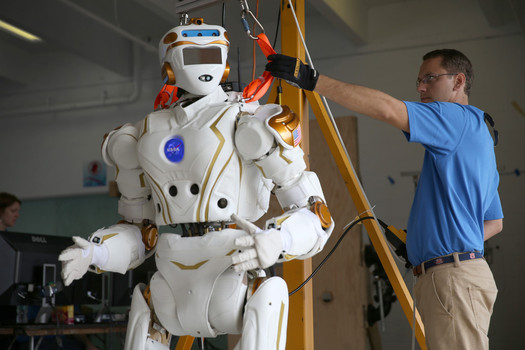

History suggests, however, that a third future, in which a benign and helpful Commander Data of “Star Trek” fame becomes humanity’s assistant, and even friend, will come to pass. Indeed, such a creation would alter what it means to be human, expanding it to include beings made of metal and composite materials, as well as flesh and blood.